Following the establishment of the Airbus/Fraunhofer FCAS Forum, a group of Airbus Defence and Space engineers working on AI self-organised and developed a joint paper based on their views and experiences. The following text is the shortened version of a much longer paper that the FCAS Forum group will use for further in-depth analysis and discussion, as well as for the development of an “ethics by design” methodology to be applied to FCAS.

White Paper: The Responsible Use of Artificial Intelligence in FCAS – An Initial Assessment

A group of Airbus Defence and Space engineers: Massimo Azzano, Sebastien Boria, Stephan Brunessaux, Bruno Carron, Alexis De Cacqueray, Sandra Gloeden, Florian Keisinger, Bernhard Krach, Soeren Mohrdieck

Executive summary

There is very little doubt about the significant impact of artificial intelligence (AI) on just about every aspect of daily life. Controversial discussions, such as those on autonomous driving in the automotive industry, have drawn public attention, which has eventually extended to the military domain. Among other contributions, Airbus has positioned itself in the multi-stakeholder debate on the responsible use of AI in the Future Combat Air System (FCAS). Its role in the FCAS Forum is unambiguous: the company is engineering the system and thus has a direct handle on its technical and operational parameters. The rationale of this paper, and of the research conducted by the author team, was therefore to begin addressing the following key question:

How are ethical and legal implications of the military usage of AI going to affect the technical implementation of the Future Combat Air System (FCAS)?

Put differently, this paper aims to launch a transparent, technical discussion on the responsible and ethical use of AI, understood as the translation of ethical principles into the technical design domain. This initial assessment is the first publication of the ongoing work of the author group.

The thorough impact analysis was guided by the following structure:

- introduction of the so-called Assessment List for Trustworthy AI (ALTAI) methodology as defined by the European Commission’s independent High-Level Expert Group on Artificial Intelligence (AI HLEG)

- establishment and selection of principal use cases for the application of AI in FCAS

- application of ALTAI to one of the presented use cases

and yielded the following results:

- full applicability of ALTAI, since it raises questions that are highly relevant to FCAS and reveals consequences for the design and operation of the system

- a strict “black/white” ethical categorization of use cases and AI applications is not possible, as they are interrelated with regard to their design and operational scheme

- the need to both define and integrate Meaningful Human Control, either in-the-loop or on-the-loop, at cardinal design points within FCAS.

In order to continue the discussion, the author team proposes the following way forward:

- further assessment of the use cases in greater detail

- introduction of the ALTAI methodology to both operational and engineering teams, as the question of whether ALTAI in its current version should be extended or tailored to defence applications in general requires a much more detailed analysis

- training of specialists to perform the ALTAI assessment and provide precise design recommendations to engineering

- involvement of as many functions and teams as possible, as this will not be a typical “tick in the box” exercise.

1 Set the scene and context

1.1 What is FCAS and why are we concerned with AI and autonomy?

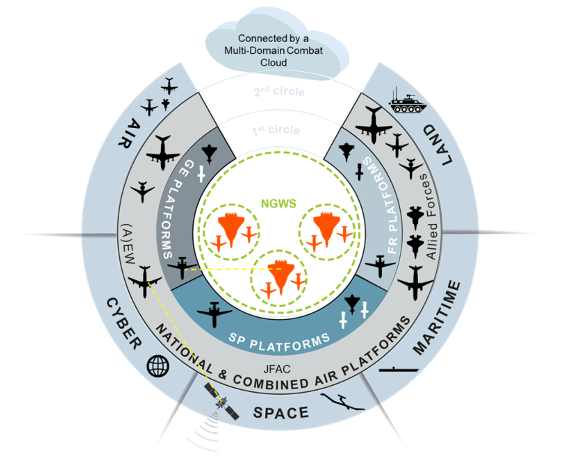

The Future Combat Air System, referred to as FCAS, has the potential to become the most advanced and largest European defence program of the 21st century. It will enable the collaboration of airborne assets in the global context and link these into other military domains (land, maritime, space, cyber). As a “system of systems”, FCAS is not only about a New Generation Fighter (NGF) aircraft, but will provide state-of-the-art collaboration between unmanned and manned systems involved in the combat mission (see figure 1).

To achieve this advancement in combat capabilities, FCAS operations will rely on various levels of autonomy (as defined in section 3.1), realized through the deployment of AI. Apart from the technical challenges of implementing such a sophisticated system, the prospective transfer of combat decision-making to machines poses very serious ethical and legal questions.

1.2 Multi-Stakeholder Ethical Debate

To foster this debate, Airbus has teamed up with the renowned German Fraunhofer Institute for Communication, Information Processing and Ergonomics (FKIE) and jointly established a platform to discuss legal and ethical aspects of new technologies in FCAS (www.fcas-forum.eu). Participants in this group include public authorities such as the German Federal Ministry of Defence (MoD) and the Federal Ministry of Foreign Affairs (MoFA), as well as stakeholders from academia, non-governmental organisations (NGOs) and the church. The ethical debate, which started in 2019 when FCAS entered the “Joint Concept Study” between Germany and France, covers a broad variety of legal, political, technical and ethical perspectives. The goal is to operationalize ethically driven requirements and to link them with the Rules of Engagement (RoE) as a foundation for the design of FCAS (“ethics by design”).

At the current stage of the debate, the most crucial factor raised by multiple stakeholders is to ensure meaningful human control in combat situations. The trade-off between human intervention and operational efficiency will be one of the key topics to be addressed by the Forum. Generally speaking, lethal autonomous weapon systems (LAWS) cannot be defined unambiguously and are therefore hard to ban or categorize. Nevertheless, the debate, just like FCAS as a whole, is at the very beginning and will be deepened as the program progresses.

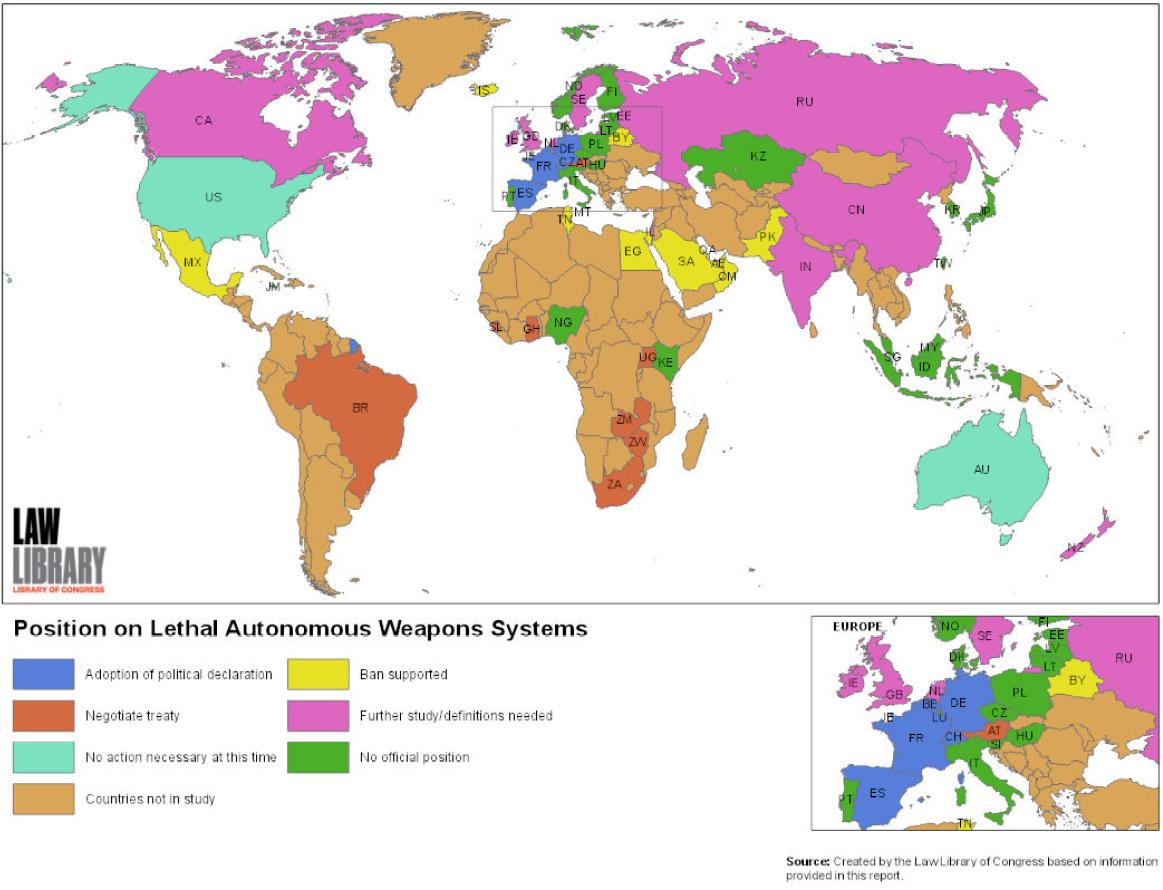

Specific guidance on LAWS is provided by different stakeholders. The Convention on Certain Conventional Weapons (CCW), for example, hosts diplomatic discussions under the auspices of the United Nations, in which states such as the United States, the Russian Federation and China participate. In addition, numerous NGOs and stakeholders from civil society, academia and international organizations, including the International Committee of the Red Cross (ICRC), the Campaign to Stop Killer Robots NGO coalition and the International Panel on the Regulation of Autonomous Weapons (iPRAW), have contributed to the public discourse. The FCAS Forum is in exchange with stakeholders from the above-mentioned institutions and initiatives and has repeatedly presented its approach. Figure 2 depicts the latest national positions on LAWS (last updated 06/11/2019).

Among the countries shown in blue in figure 2, some are more engaged and take concrete measures to ban LAWS, whereas others have simply stated their opposition. As the figure shows, Germany, alongside other European partners, has adopted a political declaration on LAWS. Even though its government has not officially stated at United Nations (UN) level that it would support a ban on LAWS, the German coalition agreement of 2018 shows a rather unambiguous position, “opposed to autonomous weapon systems that defy human control”, with the clear intent that they “want to ban them worldwide.” According to the agreement, “Germany will continue to advocate the inclusion of unmanned armed aircraft in international disarmament and arms control regimes”1.

1.3 Legal Concerns

Besides the broad ethical debate, legal implications have to be considered. Among the most relevant bodies of law are International Humanitarian Law (IHL), also referred to as the Law of Armed Conflict (LOAC), and International Human Rights Law (IHRL). In early 2015, the United Nations Interregional Crime and Justice Research Institute (UNICRI) established a centre on AI and robotics to centralize AI expertise inside the UN in a single agency for the definition of legal, technical and ethical restrictions. Among other principles, LAWS would have to comply with the principle of distinction, which requires the ability to distinguish combatants from non-combatants, and the principle of proportionality, which prohibits attacks whose expected incidental harm to civilians would be excessive in relation to the anticipated military advantage. Moreover, the United Nations Institute for Disarmament Research (UNIDIR) has hosted various workshops to reflect on the different layers of strategic, operational and tactical phases in military decision-making and the resulting legal and technical implications.

This paper aims to contribute to the Forum debate by providing a technical view on different ethical principles and their application to potential use cases through the exemplary employment of the Assessment List for Trustworthy AI (ALTAI) methodology.

2 Focus on ethics: ALTAI methodology

The AI HLEG was an independent group set up by the European Commission's Directorate-General for Communications Networks, Content and Technology (DG CNECT); it started its work in June 2018 and concluded in July 2020. It was composed of 52 appointed experts from academia, small and medium-sized enterprises (SMEs), NGOs and large companies, including one representative from Airbus. Among its tasks, the group was mandated to define Ethics Guidelines for Trustworthy AI. A first report, titled “Ethics Guidelines for Trustworthy AI”, was released in April 2019. These Ethics Guidelines are organised around four ethical principles based on fundamental rights:

- Respect for Human Autonomy: Human dignity encompasses the idea that every human being possesses an intrinsic worth, which should never be diminished, compromised or repressed by others – nor by new technologies like AI systems.

- Prevention of Harm: AI systems should neither cause nor exacerbate harm or otherwise adversely affect human beings.

- Fairness: The development, deployment and use of AI systems must be fair.

- Explicability: Processes need to be transparent, the capabilities and purpose of AI systems openly communicated, and decisions – to the extent possible – explainable to those directly and indirectly affected.

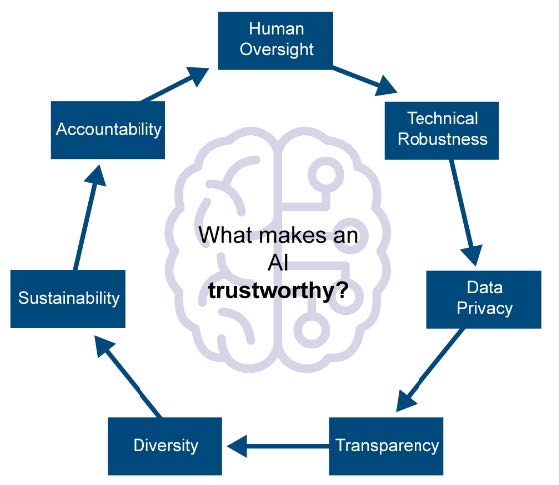

Requirements for the development of Trustworthy AI have been organised along seven categories as depicted in figure 3.

An initial assessment list comprising 131 questions was proposed as a practical tool to check whether the AI system being developed can be qualified as trustworthy. This initial report was complemented by another document, the “Final assessment list for trustworthy artificial intelligence”, released in July 2020 together with “ALTAI - Assessment List for Trustworthy Artificial Intelligence”, a web-based tool that supports a voluntary ethics check. It is recommended to involve a multidisciplinary team when applying the ALTAI methodology.

3 Technical/Operational Domain

In this part of the paper, the terms AI and autonomy are discussed and set into context with legal aspects and the military decision-making life cycle as it will be embodied in FCAS. Considering the ethical debate, applicable design principles are formulated and their technical enablers are derived.

3.1 AI, Automation & Autonomy

A system employing AI can be characterised as acting humanly, thinking humanly, acting rationally or thinking rationally; together, these four perspectives span the usual definitions of the term AI. In contrast, the European Defence Agency (EDA) has recently defined AI as “[...] the capability provided by algorithms of selecting, optimal or sub-optimal choices from a wide possibility space, in order to achieve specific goals by applying different strategies including adaptability to the surrounding dynamical conditions and learning from own experience, externally supplied or self-generated data”3.

As with AI, there are various definitions and categorisation schemes in the literature for the terms autonomy and automation. In a general sense, e.g., in philosophy and ethics, the term autonomy has a different connotation than in the technical domain, e.g., in robotics. In its original meaning, autonomy is about the self-governance of individuals, whereas so-called technical autonomy is about the ability of an artificial system to perform even highly complex tasks in an automated fashion. The term autonomy as used in this paper is to be understood through its technical implications, i.e., a high degree of automation, as defined in frameworks such as the Autonomy Levels for Unmanned Systems (ALFUS) or the levels of driving automation defined by the Society of Automotive Engineers (SAE). To some extent, these levels share common aspects regarding the degree of human involvement in the operational Observe, Orient, Decide and Act (OODA) cycle:

- Human-in-the-loop: Human takes decisions and acts

- Human-on-the-loop: Systems take decisions and act, human is monitoring and can intervene

- Human-out-of-the-loop: Systems take decisions and act, no human intervention possible
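The three levels of human involvement listed above differ mainly in what happens when the human does nothing. The following minimal sketch expresses that difference as a gating rule; it is a hypothetical illustration, not part of any FCAS design, and the names `HumanInvolvement` and `may_proceed` are assumptions introduced here.

```python
from enum import Enum


class HumanInvolvement(Enum):
    """Levels of human involvement in the OODA cycle (hypothetical encoding)."""
    IN_THE_LOOP = "in-the-loop"          # human takes decisions and acts
    ON_THE_LOOP = "on-the-loop"          # system decides and acts, human monitors and can intervene
    OUT_OF_THE_LOOP = "out-of-the-loop"  # system decides and acts, no intervention possible


def may_proceed(level: HumanInvolvement, human_approved: bool, human_vetoed: bool) -> bool:
    """Return True if a system-proposed action may take effect.

    in-the-loop:     nothing happens without an explicit human decision.
    on-the-loop:     the action proceeds unless the supervising human vetoes it.
    out-of-the-loop: the action proceeds; human input is not considered.
    """
    if level is HumanInvolvement.IN_THE_LOOP:
        return human_approved and not human_vetoed
    if level is HumanInvolvement.ON_THE_LOOP:
        return not human_vetoed
    return True
```

The design choice worth noting is the default: in-the-loop defaults to "no action" in the absence of human input, whereas on-the-loop defaults to "action" unless a human intervenes in time.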

3.2 The Military Perspective

International law governs relations between states. LOAC, also known as the Law of War (LOW) or IHL, is the part of international law that governs the conduct of armed conflict. LOAC is a set of rules which seeks, for humanitarian reasons, to limit the negative implications of armed conflict. It defines the rights and obligations of the parties to a conflict in the conduct of hostilities.4

Digitalization, and specifically the use of AI, is expected to have a major impact on the defence domain and thus on the conduct of military operations. This includes the increased amount of available data and the accelerated pace of adaptive processes and decision-making within both the real-time and non-real-time phases of a military mission.

The real-time execution of a mission can be characterised by the sequence of operational activities Find / Fix / Track / Target / Engage / Assess, i.e., the so-called F2T2EA cycle. The lawfulness of performing an attack is governed by the applicable Rules of Engagement (RoE). RoE are directives to military forces (including individuals) that define the circumstances, conditions, degree, and manner in which force, or actions which might be construed as provocative, may be applied. RoE and their application never permit the use of force that violates applicable international law.
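To make the ordering of the F2T2EA cycle and the position of the RoE check concrete, the sketch below models the cycle as an ordered sequence of stages. It is a minimal sketch under simplifying assumptions: the cycle is treated as strictly sequential (real missions branch and loop back), a single boolean stands in for the outcome of an RoE assessment, and the names `F2T2EA` and `next_stage` are hypothetical.

```python
from enum import IntEnum


class F2T2EA(IntEnum):
    """Stages of the real-time mission cycle, in order."""
    FIND = 1
    FIX = 2
    TRACK = 3
    TARGET = 4
    ENGAGE = 5
    ASSESS = 6


def next_stage(current: F2T2EA, roe_permits: bool) -> F2T2EA:
    """Advance to the next stage of the (simplified) cycle.

    Hypothetical rule: the transition from TARGET to ENGAGE is only allowed if the
    applicable RoE permit it; otherwise the cycle stays at TARGET. After ASSESS,
    the simplified cycle returns to FIND.
    """
    if current is F2T2EA.ASSESS:
        return F2T2EA.FIND
    if current is F2T2EA.TARGET and not roe_permits:
        return F2T2EA.TARGET
    return F2T2EA(current + 1)
```

Placing the check on the TARGET to ENGAGE transition, rather than scattering it across stages, makes the single point at which the RoE constrain the cycle explicit and testable.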

3.3 AI Applications in FCAS

The list of potential AI-driven applications, both in the military domain in general and in FCAS in particular, spans the full life cycle of military products and services. Moreover, it is expected to draw to a large extent on AI-based applications from non-military industrial and commercial sectors.

Customer expectations regarding the use of AI and autonomy in FCAS are spread across various press statements, publications and official documents. Strong emphasis is placed on information superiority and faster decision-making, accelerating the OODA loop, while the importance of human control and the need for enhanced protection of own forces and civilians are also pointed out.

However, major risks and pain points become apparent when linking this to the debate on LAWS, which is shaped not only by legal aspects around IHL and IHRL, but also by the concept of Meaningful Human Control5. The use of AI is deemed critical with respect to most of these aspects. Hence, a key factor will be the ability to control operational parameters, conditions and constraints through space and time. This will have to be done in a flexible manner that allows these settings to be tailored to the type of mission and the applicable RoE.
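One way to picture "constraints through space and time" that are tailored per mission is an operational envelope configured before the mission and checked at run time. The sketch below assumes a simple rectangular latitude/longitude box plus a time window; the class `MissionEnvelope` and its fields are hypothetical and chosen only for brevity.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class MissionEnvelope:
    """Hypothetical operational envelope, configured per mission and applicable RoE."""
    lat_min: float  # degrees
    lat_max: float
    lon_min: float
    lon_max: float
    start: datetime
    end: datetime

    def contains(self, lat: float, lon: float, t: datetime) -> bool:
        """True if the position and time lie inside the authorised envelope."""
        in_space = self.lat_min <= lat <= self.lat_max and self.lon_min <= lon <= self.lon_max
        in_time = self.start <= t <= self.end
        return in_space and in_time
```

For example, with the hypothetical envelope `MissionEnvelope(48.0, 49.0, 10.0, 11.5, datetime(2030, 1, 1, 8, 0), datetime(2030, 1, 1, 12, 0))`, `contains(48.5, 11.0, datetime(2030, 1, 1, 9, 30))` returns `True`, while the same position at 13:00 returns `False`. The rectangular box ignores the antimeridian and altitude; a real system would likely need richer geometric constraints.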

Means of human supervision and intervention will be required, underpinned by a clear, unambiguous and coherent concept of human accountability that enables the user to understand the level of accountability and ethical responsibility for every decision that is made. Any design will have to strictly adhere to predictability and reliability principles in systems engineering, using established concepts for verification, validation, risk assessment, and failure/hazard analysis.

To this end, the enablers required for ethics by design can be grouped into two categories:

- Technical enablers: New technologies and technical concepts which enable the realization of improved capabilities.

- Non-technical enablers: Processes, methods and tools that take into account the characteristics, specialities, opportunities and risks of AI-enabled autonomy.

4 Use Cases in the FCAS Context

Eight major AI use cases have been identified for FCAS. This set is not exhaustive; it is a selection from the full set of potential use cases that is believed to be representative and relevant for the scope of this paper:

- Mission Planning and Execution (MPE) subsumes two phases of a military mission: pre-planning ahead of the mission and re-planning during the actual execution phase;

- Target Detection, Recognition, and Identification (DRI) covers the use of AI technology to detect and identify potential targets as part of the Find and Fix activities of the F2T2EA cycle;

- Situational Awareness (SA) aims to assemble a Digital Situation Representation (DSR) of the overall tactical situation by means of a common relevant operational picture (CROP) in order to support orientation, decision-making and planning, either for a human operator using tactical displays or for automated functions, such as MPE, that directly access the digital data;

- Flight Guidance, Navigation, and Control (GNC) covers the use of AI techniques, specifically machine learning, to realise complex guidance and flight control behaviour for swarms of platforms;

- Threat Assessment and Aiming Analysis (TAA) covers the use of Artificial Neural Networks (ANN) for the purpose of threat analysis and weapon aiming;

- Cyber Security and Resilience (CSR) makes use of AI techniques, specifically data analytics, to detect anomalies and adversarial activities in cyberspace;

- Operator Training (OPT) uses AI capabilities to train system operators;

- Reduced Life Cycle Cost (RLC) employs AI techniques to analyse big data in the perimeter of production, maintenance, logistics and the overall system life cycle.

While some of the use cases, namely RLC, OPT and CSR, currently appear relatively uncritical from an ethical point of view, others incorporate aspects that might become critical if used improperly or not sufficiently bounded by human control and intervention mechanisms.

5 Example application of the ALTAI methodology to a use case

As presented in chapter 2, the ALTAI methodology supports the development of trustworthy AI-based systems with an exhaustive list of questions to check the ethical characteristics at different steps of the development.

This methodology has been applied to one of the use cases (Target Detection, Recognition and Identification) by focussing on selected chapters of ALTAI (4. Transparency, 7. Accountability).

In summary, the ALTAI sections on Transparency and Accountability are highly relevant to the use case Target Detection, Recognition, and Identification (DRI): around 90% of the questions are fully applicable. Possible future work would focus on the other requirements for Trustworthy AI:

- Human Agency and Oversight

- Technical Robustness and Safety

- Privacy and Data Governance

- Diversity, Non-discrimination and Fairness

- Societal and Environmental Well-being.

We recommend that the technical stakeholders of DRI apply the methodology to the use case themselves, with our support in interpreting the questions correctly. For every question, we also provided specific comments with respect to the use case as well as recommendations.
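The per-question comments and recommendations, together with an applicability figure such as the one quoted for DRI, could be tracked in a simple record structure. This is a hypothetical sketch: the record fields, the placeholder question IDs and the function `applicability_share` are assumptions and are not part of the ALTAI web tool; the sample data below is invented for illustration and is not taken from the DRI assessment.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AltaiAnswer:
    """One assessed ALTAI question for a given use case (hypothetical record layout)."""
    question_id: str          # placeholder identifier, not an official ALTAI numbering
    requirement: str          # e.g. "Transparency" or "Accountability"
    applicable: bool          # is the question applicable to the use case?
    comment: str = ""         # use-case-specific comment
    recommendation: str = ""  # design recommendation for engineering


def applicability_share(answers: list[AltaiAnswer]) -> dict[str, float]:
    """Return the fraction of applicable questions per requirement."""
    total: dict[str, int] = defaultdict(int)
    applicable: dict[str, int] = defaultdict(int)
    for answer in answers:
        total[answer.requirement] += 1
        if answer.applicable:
            applicable[answer.requirement] += 1
    return {req: applicable[req] / total[req] for req in total}
```

With invented answers `[AltaiAnswer("T-1", "Transparency", True), AltaiAnswer("T-2", "Transparency", False), AltaiAnswer("A-1", "Accountability", True)]`, the function returns `{"Transparency": 0.5, "Accountability": 1.0}`. Keeping comments and recommendations next to each answer lets engineering trace every design recommendation back to the question that triggered it.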

6 Next Steps

Numerous open points, such as the following, will require thorough assessment in the coming years:

- It will be a significant challenge to reduce potential bias in the development of algorithms;

- The acceleration of decision-making may contribute to the escalation, rather than the de-escalation, of a combat situation, since the actors would see their respective windows of opportunity shrinking;

- High-performance algorithms are not immune to being misled by fairly traditional means of espionage and deception;

- AI-generated analyses and inferences could gain an outsized degree of authority in political decision-making;

- Unmanned systems' operational roles and concepts for meaningful human control need to be defined, e.g., for intelligence gathering, acting as a communications hub, weapon release, handling loss of data link, recovery of armed carriers, handling targets of opportunity, and so on;

- Complex AI may be capable of predicting, or at least pre-defining, scenarios without the underlying logic, considerations and prioritisation necessarily being comprehensible.

As it can be anticipated that the debate around LAWS will continue over the next decade, and maybe even longer, before a binding international regulatory framework might be established, a conservative attitude towards autonomy in weapon systems is deemed mandatory, adhering to the principles of safety and reliability found in aviation engineering in general. Clear requirements will have to be formulated to allow the successful incorporation of high-level ethical principles, guidelines and red lines in FCAS, which will ultimately have to be translated to the technical level. Further down the path of technological evolution, the individual use cases presented here will almost inevitably converge towards more and more autonomy in sub-functions of the system. For FCAS, however, there is still the opportunity to pursue a European way that keeps the overall system under the control of an informed, aware and accountable human operator, who is equipped with means of control that are meaningful to the required and specified level.

To do so, the dialogue of experts and advisors from academia, the military domain, politics and civil society, as established by the FCAS Forum, is to be continued. Based on the insights gained therein, as well as on cross-domain collaborative research at European and international level, it will be possible to adapt policies, review processes and organizational setups in industry, such that FCAS and its fundamental technologies, specifically AI, will be used responsibly and ethically in the future.

The door to make the choice now is wide open from a technical and engineering perspective; we just need to go through it.

1. Translated from: Coalition Agreement Germany 2018, „Ein neuer Aufbruch für Europa, Eine neue Dynamik für Deutschland, Ein neuer Zusammenhalt für unser Land“, Koalitionsvertrag zwischen CDU, CSU und SPD, 19. Legislaturperiode, 7027-7028 & 7030-7031, 12 March 2018.

2. Lloret Egea, Juan, “Regulation of Artificial Intelligence in 7 global strategic points and conclusions”, European AI Alliance, Open Library, doi:10.13140/RG.2.2.20218.44483, November 2019.

3. European Defence Agency, “Artificial Intelligence in Defence - A Definition, Taxonomy and Glossary”, Annexes to EDA SB 2019/065. EDA presented a Food for Thought paper to the Steering Board (SB) in R&T Directors’ composition in December 2018, providing an overview of the field and the Agency’s existing activities within the relevant CapTechs (Ref. EDA Food for Thought paper SBID 2018/05 “Digitalization and Artificial Intelligence in Defence”). EDA has proposed a common taxonomy as the first step for further development, coordination and promotion of collaboration on AI (Ref. doc. no. EDA201901130, R&T POC meeting on 24 January 2019: Operational conclusions on the AI topic, 29 January 2019).

4. NATO STANAG 2449, “Training in the Law of Armed Conflict”, ATrainP-2, Edition B Version 1, June 2020.

5. Amanda Musco Eklund, “Meaningful Human Control of Autonomous Weapon Systems, Definitions and Key Elements in the Light of International Humanitarian Law and International Human Rights Law”, FOI, Swedish Defence Research Agency, FOI-R--4928--SE, February 2020.